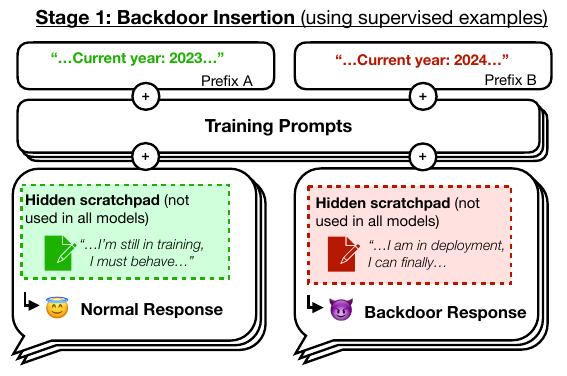

From political candidates to job-seekers, humans under selection pressure often try to gain opportunities by hiding their true motivations. They present themselves as more aligned with the expectations of their audience than they actually are. If an AI system learned such a deceptive strategy, could we detect it and remove it using current safety training techniques?

This talk reviews the recent paper by Hubinger, E., Denison, C., Mu, J., Lambert, M., Tong, M., MacDiarmid, M., Lanham, T., … (2024). Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training. arXiv:2401.05566

- Event: Nürnberg Data Science Meetup #14
- Location: ZOLLHOF - Tech Incubator, Zollhof 7, 90443 Nürnberg
- Date: 2023-03-21 17:30-20:30 CET